May 19, 2025

Israel uses AI for attacks in Gaza: from 50 targets per year to 100 per day

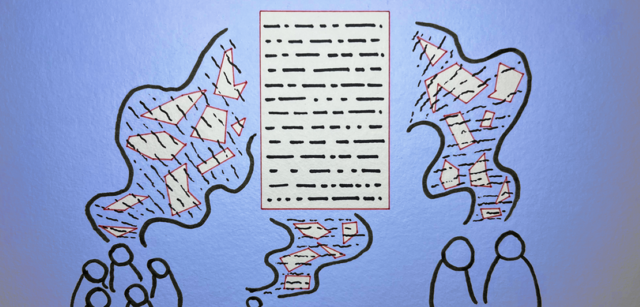

According to an article in Het Parool, Israel uses artificial intelligence (AI) to identify targets in Gaza faster, more accurately, and more frequently. This has led to a massive increase in the number of targeted attacks, with severe consequences for civilians.

Since introducing AI into its military operations in Gaza, the Israeli army has been able to identify and attack significantly more targets. Before AI, the military could identify around fifty targets per year. Now that number is about one hundred per day, says Jessica Dorsey, assistant professor of international law at Utrecht University.

According to Dorsey, targets are selected using a point system, where, for example, men receive higher scores than women. Other factors, such as phone conversations with alleged Hamas fighters or participation in suspicious WhatsApp groups, may also be considered. However, this system is controversial because it remains unclear what criteria are used to include individuals on the target list.

The Israeli military uses various AI systems, including Habsora, Lavender, the Alchemist, and Where’s Daddy. These systems work together to identify potential targets and determine the necessary amount of ammunition to neutralize them. Despite the promise of precision, the use of AI has led to more destruction, Dorsey says. According to Gaza's Ministry of Health, more than 50,000 casualties have been reported, many of whom are children.

Dorsey emphasizes that AI in warfare should always follow the human-machine-human principle: a human initiates, AI assists, and a human makes the final decision. However, in practice, the Israeli army does not strictly adhere to this principle. For example, military operators sometimes have only twenty seconds to validate a target, which Dorsey considers insufficient for careful decision-making.

According to Dorsey, AI always reflects the biases of those who develop and train it. Here, that means the systems create a one-sided view of the enemy. “AI in the hands of the wrong party that does not respect the laws of war is primarily an effective killing machine,” she warns.


Similar news items

August 22

AI outwrites bestselling fantasy authors in short story challenge


August 22

AI assistance may reduce doctors’ diagnostic sharpness


August 22

AI supports more diverse news exposure and healthier public debate
