
9 October 2023

Ethical AI applications: this remains human work

Set up a well-thought-out architecture for an AI system in one go and then assume you will have a good foundation for years to come? Forget it.

To offer an ethical AI application, it must be possible to monitor and adjust the system at any time. And yes, that remains human work. How best to approach it? The Ethics Team of the Human-centred AI working group has clear ideas about that.

AI ethics has been a topic of discussion for some time, mostly in philosophical terms. Those explorations certainly helped to establish important moral values and principles, but they generally remained rather abstract. When the generative AI hype erupted in early 2023, it quickly became clear that good ethical guardrails are not yet in place. For instance, AI chatbots have quite a tendency to 'hallucinate', revealing that their underlying language models are not yet good at distinguishing fact from fiction.

Social unrest

The general public is currently experiencing the power and benefits of AI applications. At the same time, it is clear to everyone that this technology is not yet completely reliable. There are also concerns that advanced AI applications are now available to anyone, including people with malicious intentions. That causes social unrest, especially as developments in AI are accelerating. In Europe, regulation to minimise the potential risks of AI is in the pipeline, but it is likely to be a few years before that law is actually in place at national level.

Lively debate

One advantage of recent developments, however, is that the ethical application of AI is now high on the agenda everywhere. The Human-centred AI working group of the Dutch AI Coalition is noticing this too. A good example is the success of the AI Parade, organised by the working group together with partners. This initiative, which uses public libraries to reach the general public and involve them in the discussion about the social impact of AI, is proving to be a good way to share concerns and clear up misunderstandings about the technology.

Team Ethics

As generative AI applications follow one another in rapid succession, calls for ethical principles are louder than ever. The Ethics Team of the Human-centred AI working group is all too aware of this. The team consists of three experts who have been deeply engaged with the ethical side of AI for many years: Jeroen van den Hoven (professor of Technology and Ethics at TU Delft), Sophie Kuijt (Data & AI Ethics community leader at IBM) and Kolja Verhage (manager of Digital Ethics at Deloitte). The approach the team is now developing further focuses on the practical side of achieving ethical AI applications.

Systems perspective

"When it comes to the ethical application of AI, everyone can very quickly write down all kinds of nice principles. But that is rather non-committal," Jeroen van den Hoven stresses. "What does it mean concretely? And what exactly needs to happen to actually turn those fine words into reality? It is important to realise that the focus should not be only on the algorithm and the quality of the available data. With digital ethics, it is important to take a systems perspective."

Read the full article on the Dutch AI Coalition's website (in Dutch).

Similar news items

September 9

Multilingual organizations risk inconsistent AI responses

AI systems do not always give the same answers across languages. Research from CWI and partners shows that Dutch multinationals may unknowingly face risks, from HR to customer service and strategic decision-making.

September 9

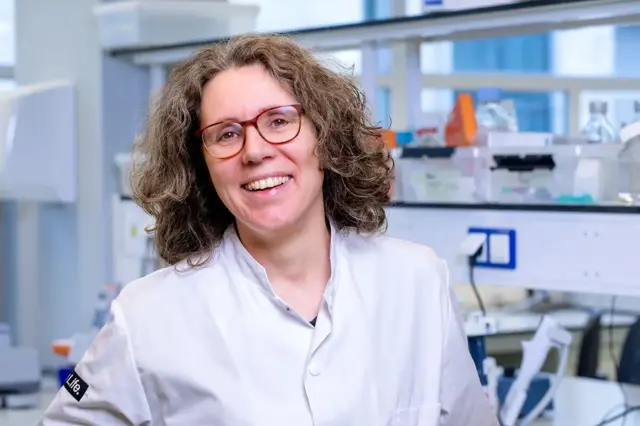

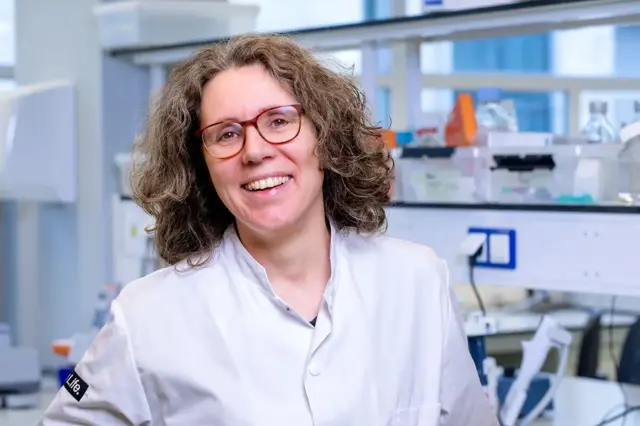

Making immunotherapy more effective with AI

Researchers at Sanquin have used an AI-based method to decode how immune cells regulate protein production. This breakthrough could strengthen immunotherapy and improve cancer treatments.

September 9

ERC Starting Grant for research on AI’s impact on labor markets and the welfare state

Political scientist Juliana Chueri (Vrije Universiteit Amsterdam) has received an ERC Starting Grant for her research into the political consequences of AI for labor markets and the welfare state.
